At first I selected several sheets and tried an ordinary Replace, but it did not work.

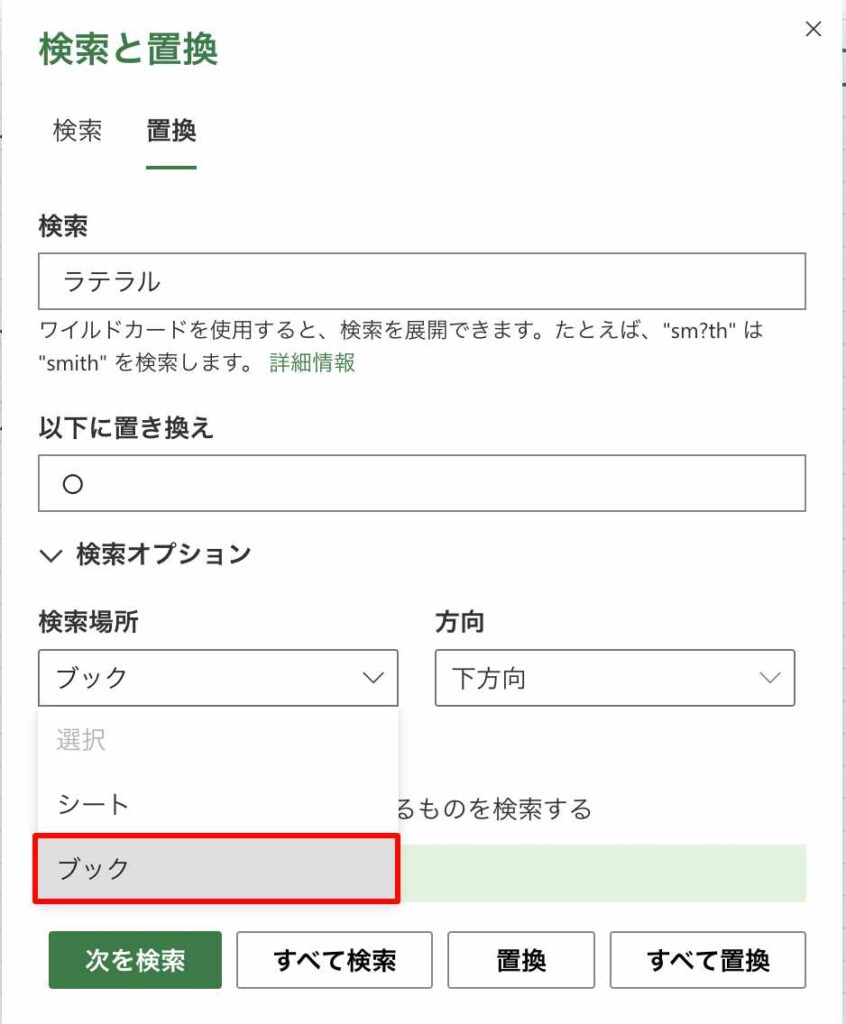

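After a little research, I found that you just need to choose "Workbook" instead of "Sheet" in the Replace options, as shown below, so I am noting it here.

Setting the search scope to Workbook

As shown below, if you open "Options", set "Within" to "Workbook", and then run Replace, the replacement is applied across all sheets.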


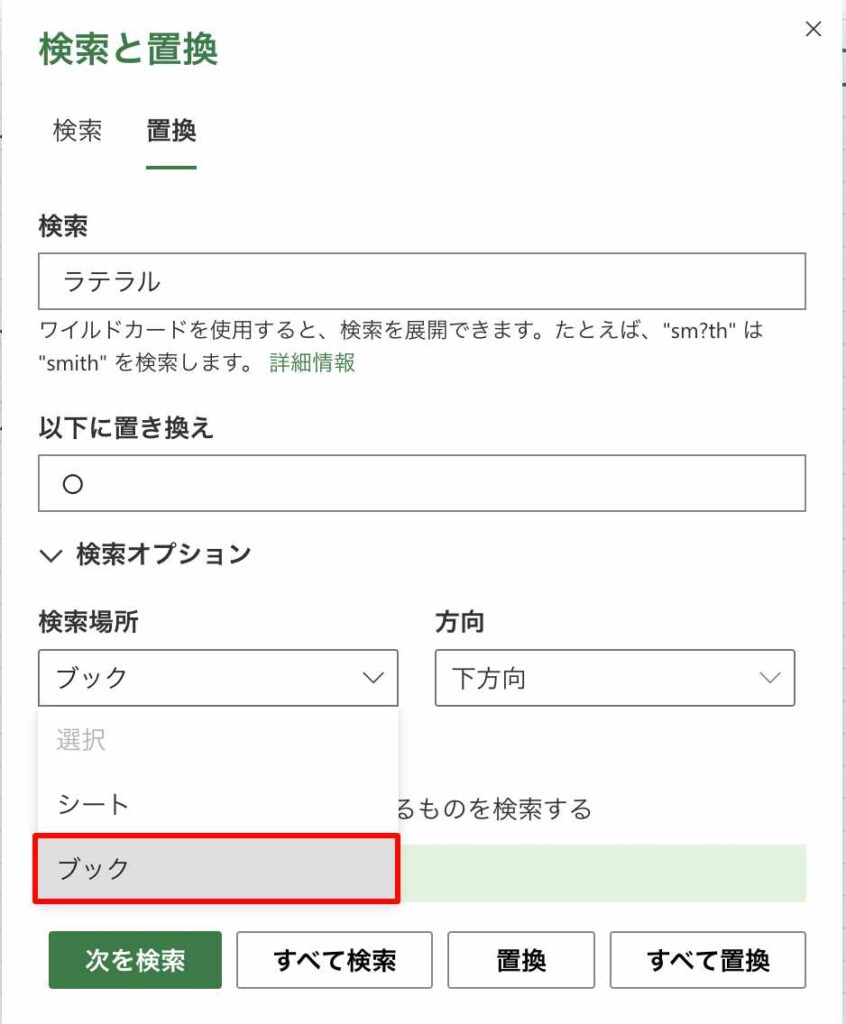

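Yesterday I left my car with Mazda for its vehicle inspection (shaken) in its 15th year, so here is what it cost.

I use Mazda's Pack de Mente plan (two years of maintenance) every time, and including its 91,520 yen, the total came to 266,099 yen.

Below is a breakdown of the costs, with minor items omitted.

The left headlight had become hazy, so I asked for it to be cleaned, and since the battery was starting to worry me, I had it replaced.

Also, this time the salesperson recommended a Purple Saber as an emergency stop warning light, and at 2,750 yen it was not very expensive, so I decided to buy one.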

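Going all the way to Yokohama is a hassle, so I applied for my passport at the passport center on the 2nd floor of Solid Square near Kawasaki Station.

The Kawasaki passport center was more spacious than I expected, and even though it was the year-end and New Year period, when crowds are expected, the application took only about 30 minutes, from 9:30 to 10:00.

My previous passport, issued more than 10 years ago, had expired, so I needed the following for the application.

Required

・ID photo

・Certified copy of family register (koseki tohon)

・Identification such as a driver's license

Helpful to have

・Previous passport (expired)

・My Number Card

Incidentally, if you bring your My Number Card, you can get the family register copy at the convenience store (FamilyMart) on the 1st floor. (You will need the card's 4-digit PIN.)

Also, it seems that if you apply on January 4, the earliest you can pick the passport up is January 12.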

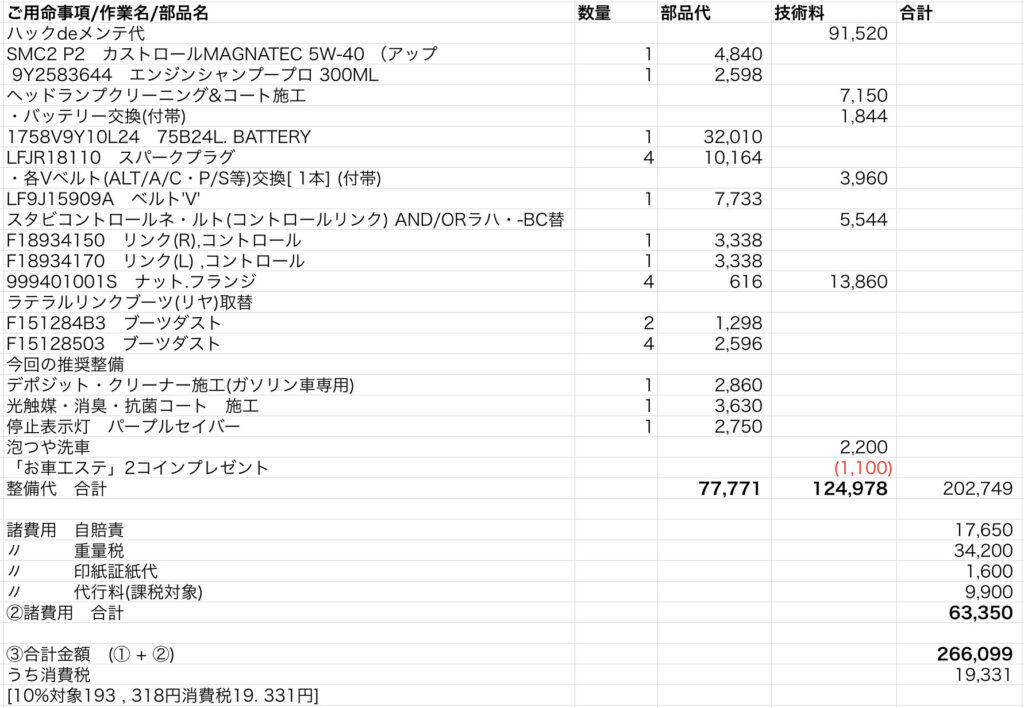

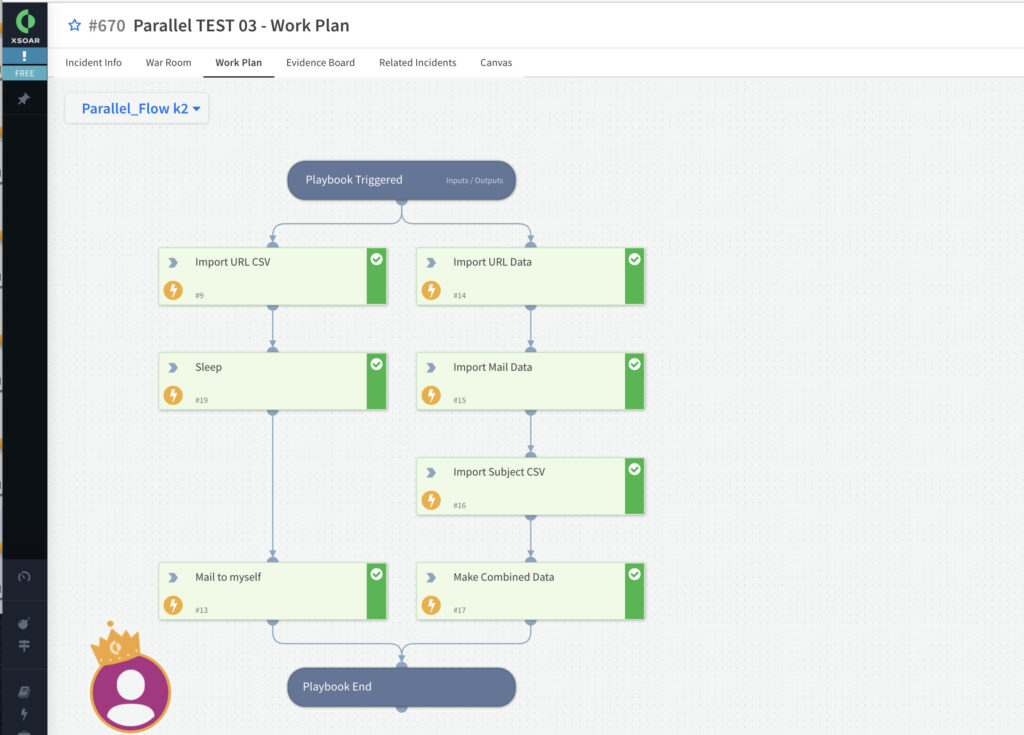

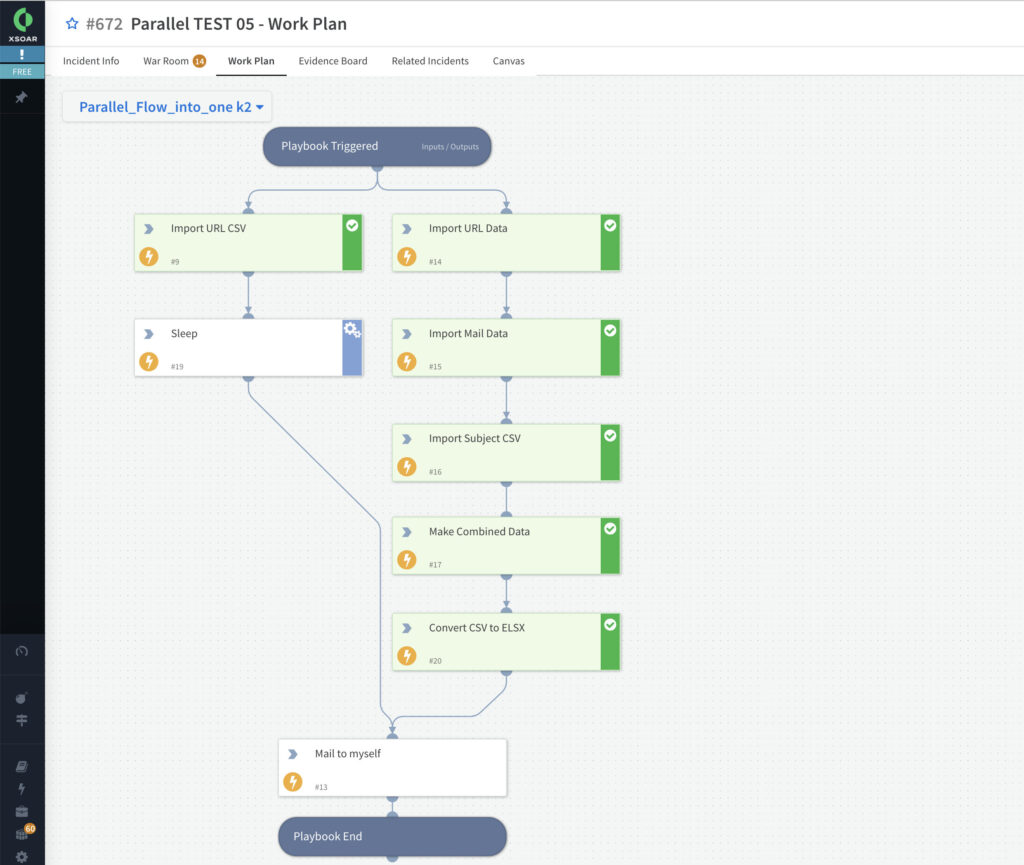

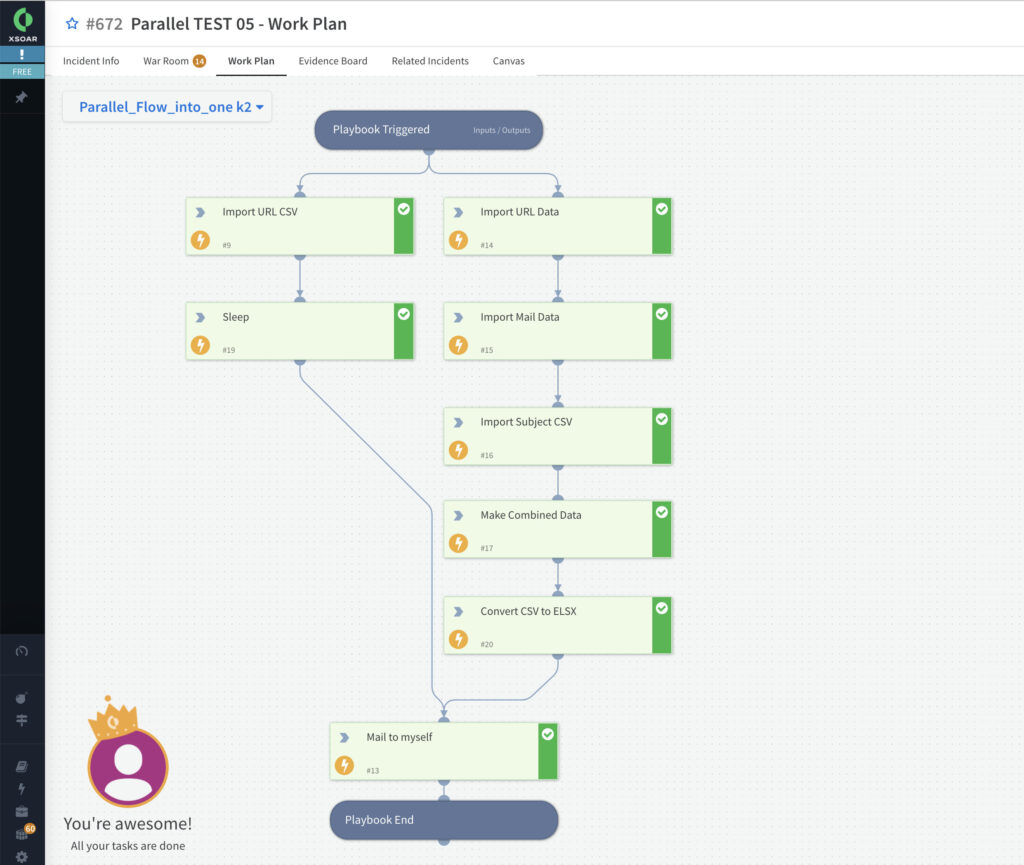

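Even if one branch reaches its end, the playbook keeps running until the other branch has finished.

It waits until both branches are done and then runs the final task.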
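In ordinary code, this "wait for both branches, then continue" behaviour is called a join. The sketch below is only an analogy written in plain Python with `concurrent.futures`, not anything XSOAR itself runs; the branch names and sleep times are invented so that branch A finishes first and still has to wait for branch B.

```python
from concurrent.futures import ThreadPoolExecutor, wait
import time


def branch(name, seconds, log):
    """Simulate one playbook branch that takes `seconds` to finish."""
    time.sleep(seconds)
    log.append(f"{name} done")


def run_playbook():
    log = []
    with ThreadPoolExecutor(max_workers=2) as pool:
        futures = [
            pool.submit(branch, "branch A", 0.1, log),  # reaches its end first
            pool.submit(branch, "branch B", 0.3, log),  # still running
        ]
        wait(futures)  # block until BOTH branches have finished
    log.append("final task")  # runs only after the join
    return log


if __name__ == "__main__":
    print(run_playbook())
```

However the timings are changed, "final task" is always the last entry, because it is appended only after `wait()` returns for both futures.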

I had ChatGPT write a Python program that combines the following three files into a single JSON document.

mail_src.csv

```csv
mail-src,mail-subject
aaa@yyy.com,"test ay1"
aaa@yyy.com,"test ay2"
aaa@zzz.com,"test az1"
bbb@yyy.com,"test by1"
bbb@zzz.com,"test bz1"
ccc@yyy.com,"test cy1"
ccc@zzz.com,"test cz1"
```

mail_data.csv

```csv
uid,mail-src
user-a,"aaa@yyy.com,aaa@zzz.com"
user-b,"bbb@yyy.com,bbb@zzz.com"
user-c,"ccc@yyy.com,ccc@zzz.com"
```

url_data.csv

```csv
dest,state,category,black,pc,uid,mail
https://aaa.com:9001/aaa,pass,phishing,no,pc-a,user-a,aaa@xxx.com
https://bbb.com:9001/bbb,block,c2,yes,pc-b,user-b,bbb@xxx.com
https://ccc.com:9001/ccc,pass,c2,yes,pc-c,user-c,ccc@xxx.com
```

The intended JSON output looks like this.

```json
[
  {
    "dest": "https://aaa.com:9001/aaa",
    "state": "pass",
    "category": "phishing",
    "black": "no",
    "pc": "pc-a",
    "uid": "user-a",
    "mail": "aaa@xxx.com",
    "mail-src": {
      "aaa@yyy.com": [
        "test ay1",
        "test ay2"
      ],
      "aaa@zzz.com": [
        "test az1"
      ]
    }
  },
  {
    "dest": "https://bbb.com:9001/bbb",
    "state": "block",
    "category": "c2",
    "black": "yes",
    "pc": "pc-b",
    "uid": "user-b",
    "mail": "bbb@xxx.com",
    "mail-src": {
      "bbb@yyy.com": [
        "test by1"
      ],
      "bbb@zzz.com": [
        "test bz1"
      ]
    }
  },
  {
    "dest": "https://ccc.com:9001/ccc",
    "state": "pass",
    "category": "c2",
    "black": "yes",
    "pc": "pc-c",
    "uid": "user-c",
    "mail": "ccc@xxx.com",
    "mail-src": {
      "ccc@yyy.com": [
        "test cy1"
      ],
      "ccc@zzz.com": [
        "test cz1"
      ]
    }
  }
]
```

And here is the Python program ChatGPT actually produced (with a few tweaks of my own to fit my environment).

```python
import csv
import json

# Load the CSV files.
#
# mail_src.csv, mail_data.csv and url_data.csv are each read with
# csv.DictReader, so every file becomes a list of dicts: the CSV header
# row supplies the keys and each data row becomes one dict.
with open('mail_src.csv', 'r', newline='') as file:
    mail_src_data = list(csv.DictReader(file))

with open('mail_data.csv', 'r', newline='') as file:
    uid_data = list(csv.DictReader(file))

with open('url_data.csv', 'r', newline='') as file:
    dest_data = list(csv.DictReader(file))

# Map each mail address to its subjects.
#
# From mail_src.csv, build mail_subject_map: the mail address is the key
# and the related subjects are stored as a list. 'mail-subject' matches the
# header of mail_src.csv. Stray quotes (curly or straight) are stripped.
mail_subject_map = {}
for entry in mail_src_data:
    mail = entry['mail-src']
    subject = entry['mail-subject'].replace('\u201d', '').replace('"', '')
    if mail in mail_subject_map:
        mail_subject_map[mail].append(subject)
    else:
        mail_subject_map[mail] = [subject]

# Build the JSON data.
#
# For each row of dest_data, create a dict dest_entry with the keys
# 'dest', 'state', 'category', 'black', 'pc', 'uid' and 'mail'.
json_data = []
for entry in dest_data:
    uid = entry['uid']
    mail = entry['mail']
    dest_entry = {
        'dest': entry['dest'],
        'state': entry['state'],
        'category': entry['category'],
        'black': entry['black'],
        'pc': entry['pc'],
        'uid': uid,
        'mail': mail,
        'mail-src': {}
    }

    # Fill in 'mail-src'.
    #
    # Combine mail_data.csv (uid -> comma-separated addresses) with
    # mail_subject_map to build the 'mail-src' value in the required shape.
    if uid in [row['uid'] for row in uid_data]:
        src_field = next(row['mail-src'] for row in uid_data if row['uid'] == uid)
        mail_src = [x.strip() for x in src_field.split(',')]
        for src in mail_src:
            if src in mail_subject_map:
                dest_entry['mail-src'][src] = mail_subject_map[src]

    json_data.append(dest_entry)

# Convert to JSON and print.
#
# json.dumps() serializes json_data into json_output in the format shown
# above, and print(json_output) writes it out.
json_output = json.dumps(json_data, indent=2)
print(json_output)
```
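To check the merge logic without creating any files, the same steps can be run against the sample data held in memory. This is a sketch, not ChatGPT's output: `build_records` and `read_rows` are hypothetical names, `io.StringIO` stands in for the three CSV files, and instead of scanning `uid_data` for every row it first builds a dict keyed by uid.

```python
import csv
import io
import json

# Sample data copied from the three CSV files above.
MAIL_SRC_CSV = """mail-src,mail-subject
aaa@yyy.com,"test ay1"
aaa@yyy.com,"test ay2"
aaa@zzz.com,"test az1"
bbb@yyy.com,"test by1"
bbb@zzz.com,"test bz1"
ccc@yyy.com,"test cy1"
ccc@zzz.com,"test cz1"
"""
MAIL_DATA_CSV = """uid,mail-src
user-a,"aaa@yyy.com,aaa@zzz.com"
user-b,"bbb@yyy.com,bbb@zzz.com"
user-c,"ccc@yyy.com,ccc@zzz.com"
"""
URL_DATA_CSV = """dest,state,category,black,pc,uid,mail
https://aaa.com:9001/aaa,pass,phishing,no,pc-a,user-a,aaa@xxx.com
https://bbb.com:9001/bbb,block,c2,yes,pc-b,user-b,bbb@xxx.com
https://ccc.com:9001/ccc,pass,c2,yes,pc-c,user-c,ccc@xxx.com
"""


def read_rows(text):
    """Parse CSV text into a list of dicts keyed by the header row."""
    return list(csv.DictReader(io.StringIO(text)))


def build_records(mail_src_text, mail_data_text, url_data_text):
    # mail address -> list of subjects, in file order
    subjects = {}
    for row in read_rows(mail_src_text):
        subjects.setdefault(row["mail-src"], []).append(row["mail-subject"])

    # uid -> list of mail addresses (the quoted, comma-separated column)
    srcs_by_uid = {
        row["uid"]: [x.strip() for x in row["mail-src"].split(",")]
        for row in read_rows(mail_data_text)
    }

    records = []
    for row in read_rows(url_data_text):
        record = dict(row)  # keeps the header order: dest, state, ..., mail
        record["mail-src"] = {
            src: subjects[src]
            for src in srcs_by_uid.get(row["uid"], [])
            if src in subjects
        }
        records.append(record)
    return records


if __name__ == "__main__":
    print(json.dumps(build_records(MAIL_SRC_CSV, MAIL_DATA_CSV, URL_DATA_CSV), indent=2))
```

Running it prints JSON with the same structure as the intended output above. The uid dict makes each lookup a single dictionary access instead of a scan over `uid_data`, which only matters once the files grow large.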

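Writing this by hand would probably take even someone well versed in Python a few hours, but ChatGPT produces it in an instant, which is impressive.

Also, as a Python beginner, I learn a lot when it uses functions I have never seen before.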

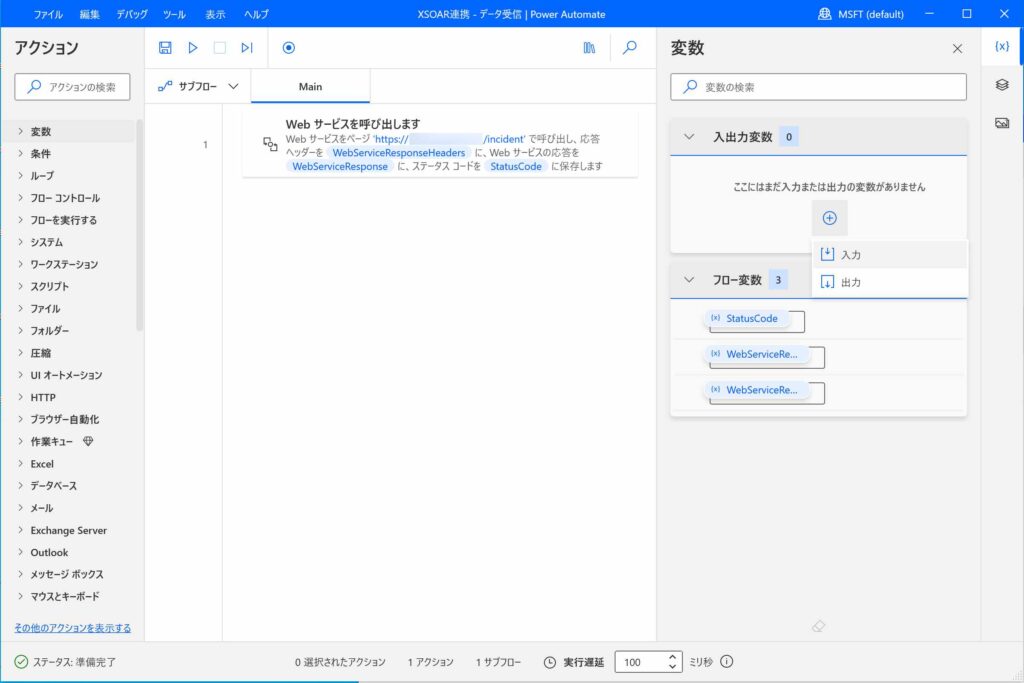

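Previously, I used Power Automate (cloud) to call an XSOAR incident-creation task that I had built in advance in Power Automate for Desktop.

At that time, however, I did not pass any data from Power Automate (cloud), so this time I called the task so that it creates an XSOAR incident based on data supplied by Power Automate (cloud).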

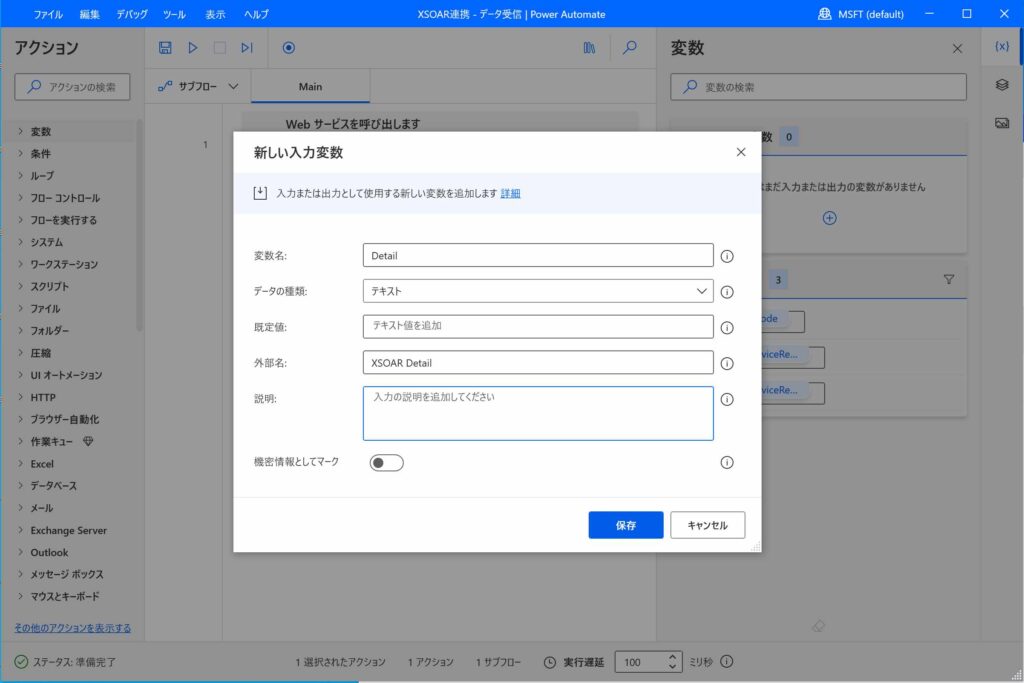

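To receive data from Power Automate (cloud), you must first define input/output variables on the Power Automate for Desktop side.

For this test I created the following two input variables.

・Detail

・Date

To create them, open the flow designer in Power Automate for Desktop; the right pane has an "Input/output variables" section, where you choose "Input".

A pop-up like the one below appears; fill in the fields shown on the screen (here the variable name is "Detail").

Of these fields, the value you enter in "External name" is the one that appears later in the Power Automate (cloud) settings screen.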

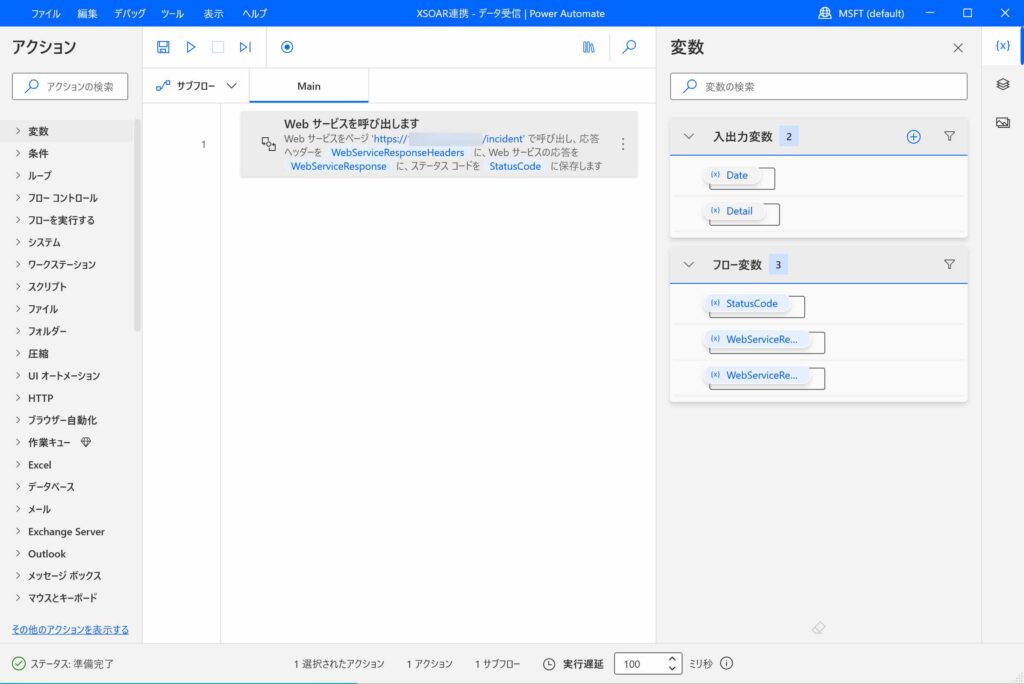

The screen below shows the result after setting up the "Date" variable in the same way.

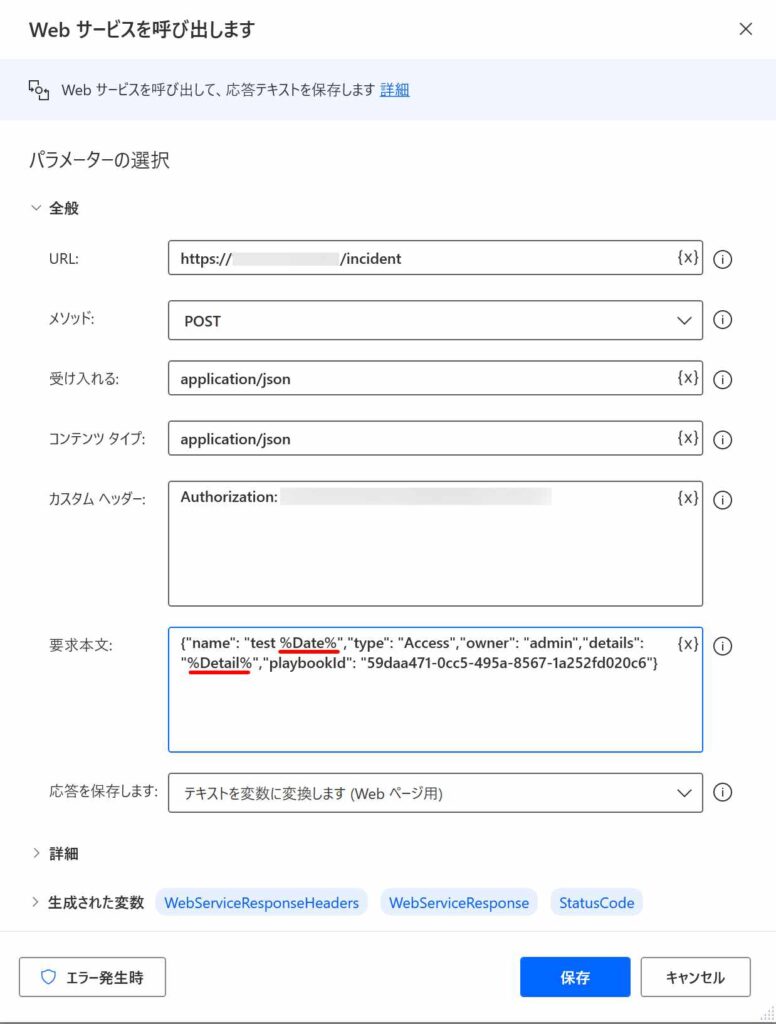

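Next, open the action that actually calls the XSOAR API ("Invoke web service" in the screen above) and set the variables you just created, as marked with red lines in the figure below.

On the XSOAR side, "test %Date%" becomes the incident name, and the value of Detail fills the Detail field shown when the incident is opened.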
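For illustration, here is a Python sketch of the JSON body that results once %Date% and %Detail% are substituted. It is hedged: the actual PAD request body is only visible in the screenshot, so the "type" and "owner" values are borrowed from the curl command in the XSOAR Web API post, and `build_incident_body` is a hypothetical helper. Building the body with `json.dumps` rather than plain text substitution also escapes any double quotes or line breaks in the Detail text, which would otherwise break the JSON.

```python
import json
from datetime import date


def build_incident_body(detail: str, run_date: str) -> str:
    """Return the incident JSON with %Date% and %Detail% filled in (hypothetical helper)."""
    body = {
        "name": f"test {run_date}",  # incident name: "test %Date%"
        "type": "Access",            # assumed, copied from the curl example
        "owner": "admin",            # assumed, copied from the curl example
        "details": detail,           # shown in the incident's Detail field
    }
    return json.dumps(body, ensure_ascii=False)


if __name__ == "__main__":
    print(build_incident_body("TEST", date.today().isoformat()))
```

The date format actually sent depends on how the Date value is set in Power Automate (cloud); `isoformat()` here is just one choice.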

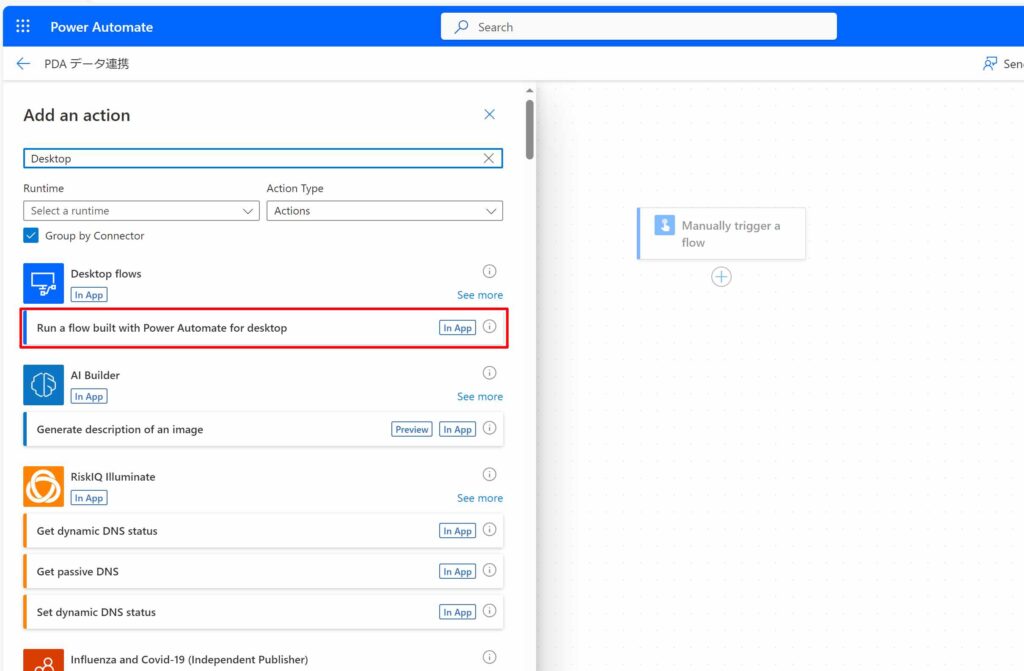

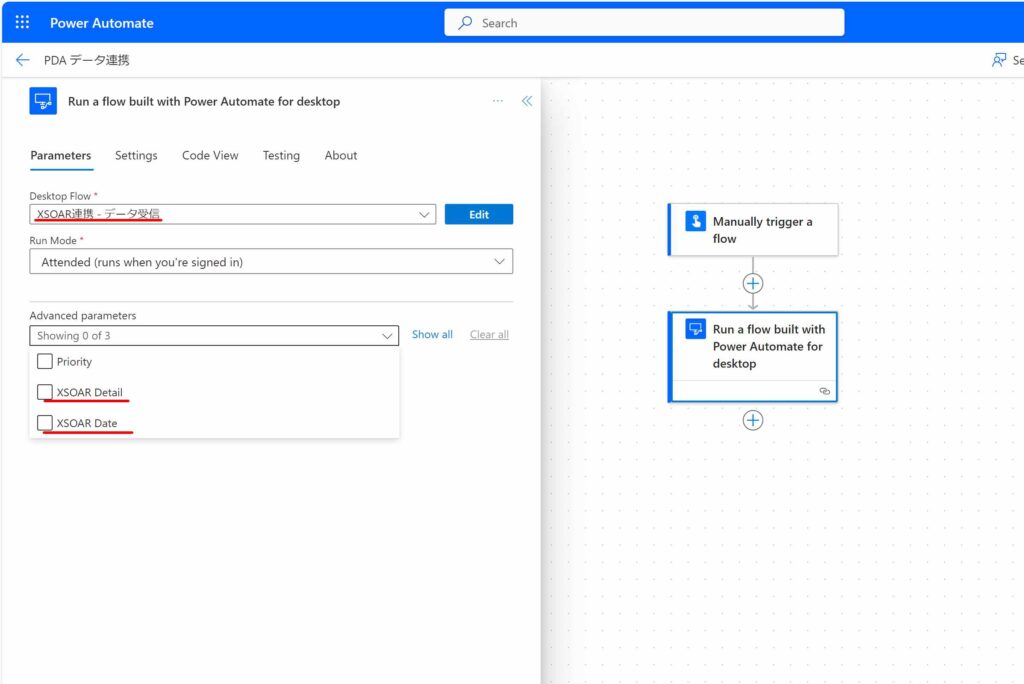

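After configuring PAD as in step 1, save it (don't forget!) and then move on to creating the flow in Power Automate (cloud).

In the flow editor, search for "Desktop" and you will find the action "Run a flow built with Power Automate for desktop"; select it.

When you open its settings, the top item is "Desktop Flow", as shown below; from the drop-down under it, select the flow you created in PAD earlier (here, "XSOAR連携 – データ受信").

Then, when you expand the "Advanced parameters" drop-down below it, the variables you defined in PAD are listed by their external names; tick the checkbox for each one.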

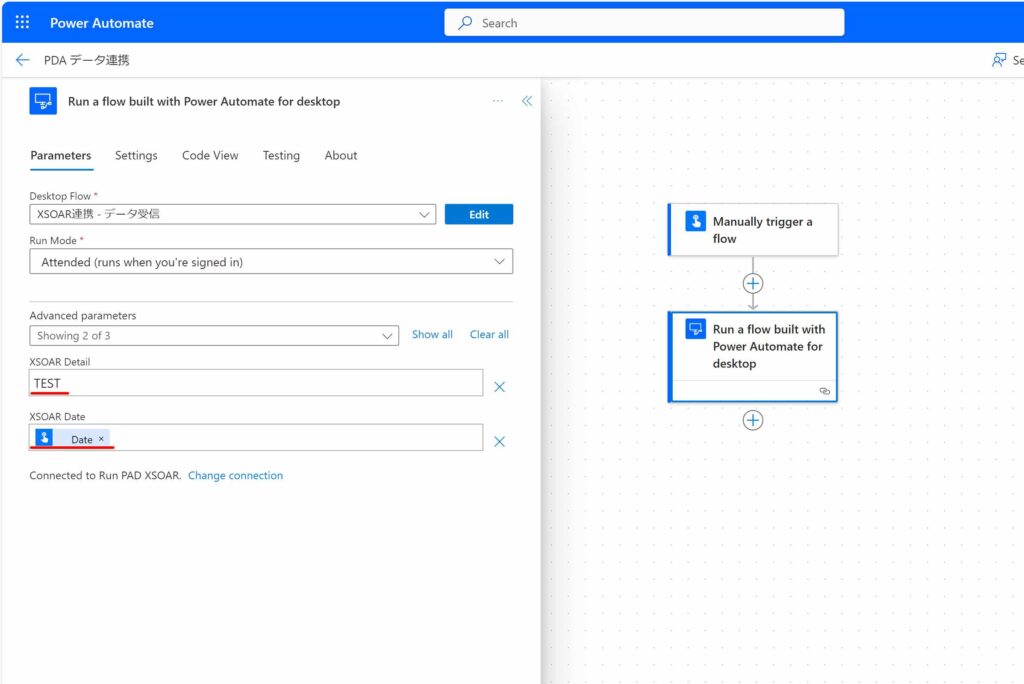

Then enter the data you want to pass to each variable in its text field.

Here I set the fixed string "TEST" for "XSOAR Detail" and the current date for "XSOAR Date".

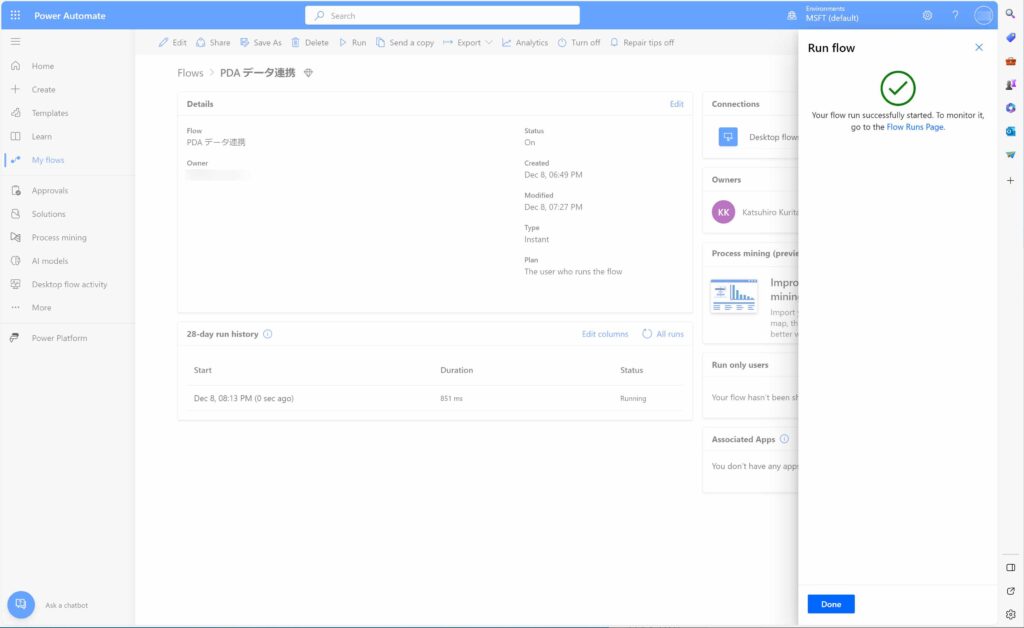

With that, everything is ready, so run the Power Automate (cloud) flow you just created.

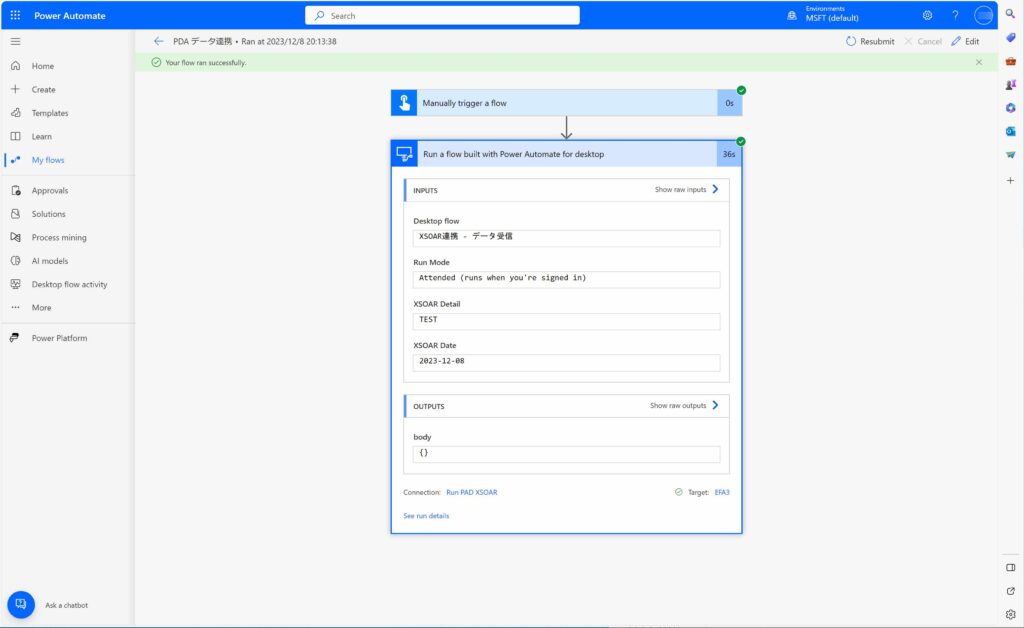

It seemed to work, so to be sure I checked the run history of the Power Automate (cloud) flow: the variables were set and the run completed successfully.

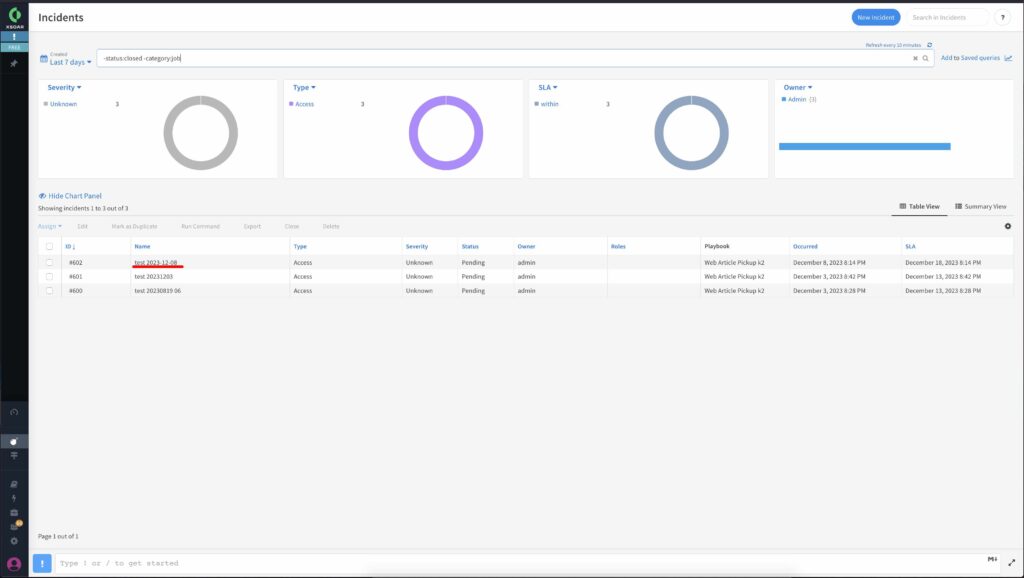

Next, I checked whether the incident had been registered correctly on the XSOAR side.

As underlined in red, the incident name contained today's date, passed via %Date%.

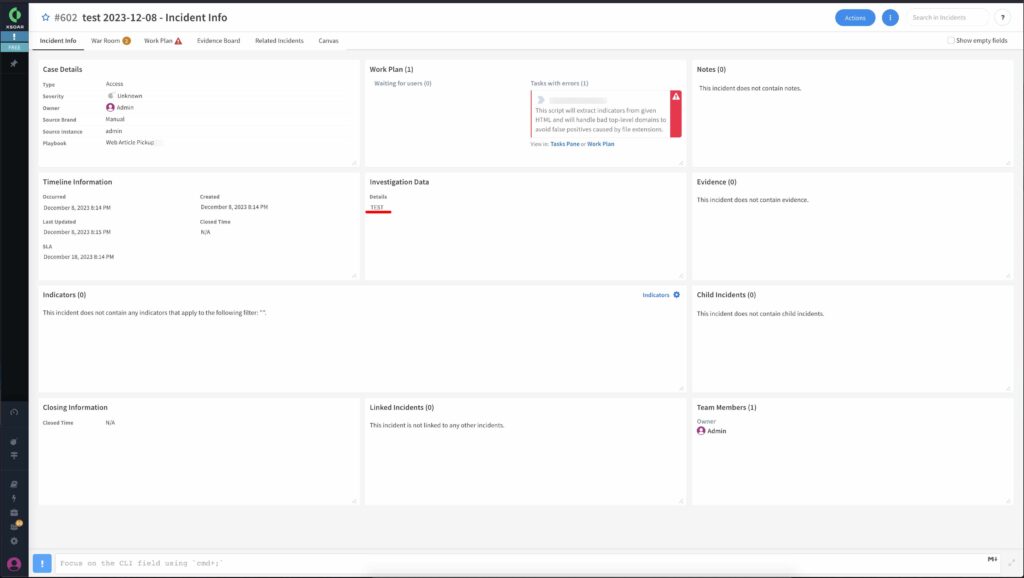

And when I opened the incident, I could confirm that the Detail section contained the string "TEST", passed via %Detail%.

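At work I use Teams and Power Automate, and I had wanted to test various kinds of automation with them at home too.

While searching online, I read that you can use them for free by joining the Microsoft 365 Developer Program, so I applied through the site below.

Microsoft 365 Developer Program https://developer.microsoft.com/en-us/microsoft-365/dev-program

Proceed with registration from "Join now" on the page above.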

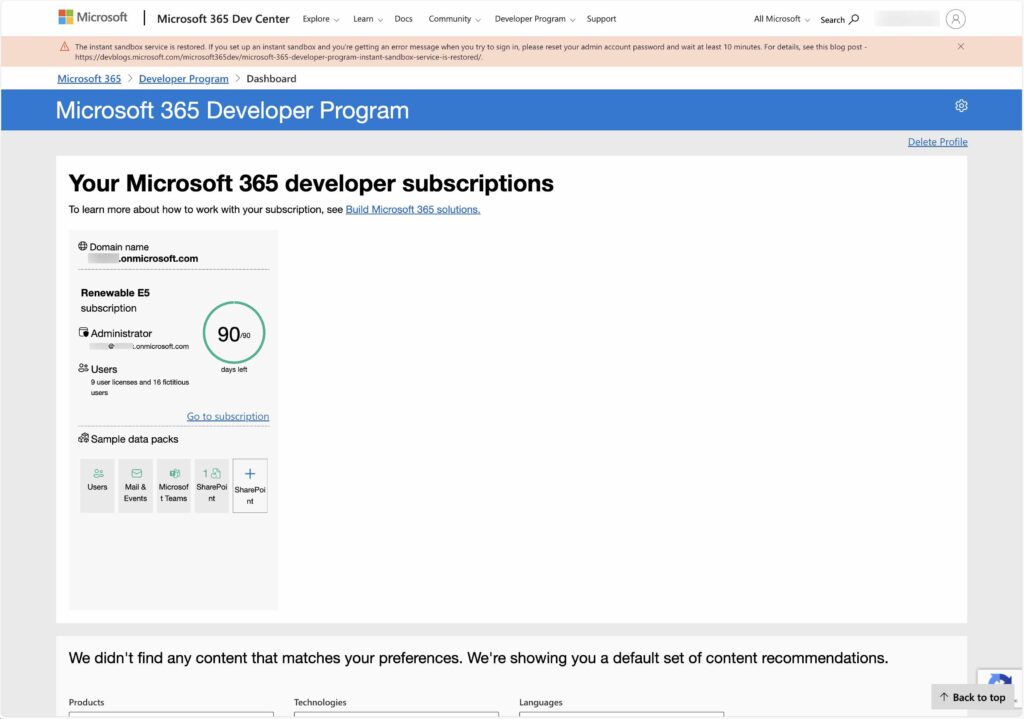

The following screen then appeared, and in the upper left I could see that an account "xxx@xxx.onmicrosoft.com" had been issued.

With this onmicrosoft.com account, you can apply to use the Power Apps plan below for free.

Power Apps: Get started free https://powerapps.microsoft.com/ja-jp/landing/developer-plan/

That site has a "Get started free" button; clicking it displays the screen below.

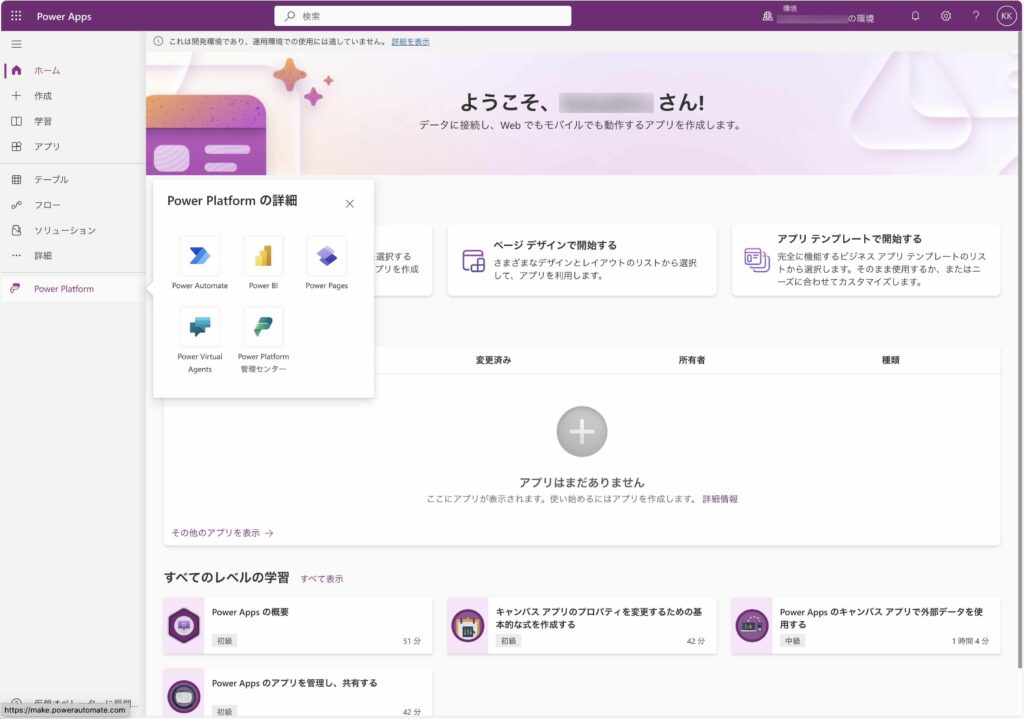

Continuing through the process, the following screen appears.

Selecting "Power Platform" in the left pane of this screen makes Power Automate, Teams and other services available.

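Using Power Automate, I built a flow that forwards messages posted to a specific Teams channel to Slack.

For Power Automate, I am testing with the Microsoft 365 Developer Program; I plan to write about that in a separate post.

The scenario: create a team called "インシデント対応" (Incident Response) in Teams, detect any message posted to its "General" channel, and send its content to Slack.

Create the flow in Power Automate. The whole flow consists of the following six steps.

Simply receiving a Teams message and forwarding it to Slack should take fewer steps, but it ended up this way because the flow converts the HTML to plain text, turns the array into a string, and then strips unneeded brackets and line breaks before sending to Slack.

This processing was surprisingly tricky, so I plan to add notes on it to this post later for the record.

The detailed processing is described below. (2023.12.3)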

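In "Initialize variable Teams message", the Teams message that the previous step converted to plain text is stored in the variable teams_array.

At this point the plain text is (for some reason) wrapped in array brackets "[" and "]", so its Type is set to Array.

In "Convert variable from array to string", the replace() function turns the contents of teams_array from an Array into a String, which is stored in the variable teams_string.

In "Initialize variable newline code", the newline code "\n" is stored in a variable named LFcode for use in the next step.

Finally, in "Post message", the replace() function replaces each newline code "\n" in teams_string with a blank.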
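The clean-up chain is easier to follow outside the flow designer. Below is a Python imitation, not Power Automate expression syntax, and it rests on an assumption: that converting the Array to a String yields JSON text in which each line break appears as a literal backslash followed by n (which would explain why the "\n" stored in LFcode matches them). The function name and the sample message are hypothetical, and this sketch replaces each "\n" with a single space.

```python
import json
from typing import List


def teams_array_to_text(teams_array: List[str]) -> str:
    """Mimic the flow's clean-up steps on a plain-text Teams message held in an array."""
    # Array -> String: assumed to produce JSON text such as '["line1\\nline2"]',
    # where each line break appears as the two characters backslash and n.
    teams_string = json.dumps(teams_array, ensure_ascii=False)
    # Strip the brackets and double quotes left over from the array form.
    # Note: this also removes any [ ] or " that were part of the message itself.
    for ch in ("[", "]", '"'):
        teams_string = teams_string.replace(ch, "")
    # Replace each literal backslash-n (the LFcode value) with a space, then trim.
    return teams_string.replace("\\n", " ").strip()


if __name__ == "__main__":
    print(teams_array_to_text(["https://example.com/incident\n"]))
```

As the comment notes, blanket replacement of brackets and quotes is blunt; it works for URL-only messages like the ones in this test but would also strip those characters from ordinary text.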

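Below is the result of sending messages from Power Automate to Slack's general channel.

The messages near the top were forwarded from Teams as-is, so they appear as HTML; the later ones were converted to plain text with "[" and the line break "\n" removed, so only the URL is shown.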

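XSOAR provides a Web API: running a command like the one below from the command line starts the playbook specified by "playbookId" and records the information specified in "details" as incident information (which can also be used as playbook input data).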

```shell
curl -kv 'https://XX.XXX.XX.X:XXXX/incident' -X POST \
  -H 'Authorization: XXXXXXXXXX' \
  -H 'Accept: application/json' \
  -H 'Content-Type: application/json,application/xml' \
  -d '{"name": "test 20231201","type": "Access","owner": "admin","details": "http://k2-ornata.com/","playbookId": "59daa471-0cc5-495a-8567-1a252fd020c6"}'
```
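The mapping from the curl flags to the fields of "Invoke web service" may be easier to see in code. This is a sketch using Python's standard `urllib.request`, not something the article ran: the host, port and API key below are hypothetical placeholders because the original is masked, and the Content-Type is set to plain `application/json` rather than the curl command's combined value.

```python
import json
import ssl
import urllib.request

# Hypothetical placeholders: the article masks the real host, port and key.
XSOAR_URL = "https://xsoar.example.com:8443/incident"
API_KEY = "YOUR-API-KEY"

# Same payload as the curl example above.
INCIDENT = {
    "name": "test 20231201",
    "type": "Access",
    "owner": "admin",
    "details": "http://k2-ornata.com/",
    "playbookId": "59daa471-0cc5-495a-8567-1a252fd020c6",
}


def build_incident_request(url, api_key, incident):
    """Build a POST request equivalent to the curl command (-X POST, -H ..., -d ...)."""
    return urllib.request.Request(
        url,
        data=json.dumps(incident).encode("utf-8"),  # -d: JSON request body
        method="POST",                              # -X POST
        headers={                                   # -H lines
            "Authorization": api_key,
            "Accept": "application/json",
            "Content-Type": "application/json",
        },
    )


def send(request):
    """Send the request. Like curl -k, this skips certificate checks (test servers only)."""
    context = ssl.create_default_context()
    context.check_hostname = False
    context.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(request, context=context) as response:
        return json.loads(response.read())
```

With real values filled in, `send(build_incident_request(XSOAR_URL, API_KEY, INCIDENT))` would post the incident. Because the body comes from `json.dumps`, it is always well-formed JSON, which sidesteps the kind of error described below.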

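Based on this, I tried accessing XSOAR the same way with the "Invoke web service" action in Power Automate for Desktop.

There seem to be several sites that generate a web request from a curl command, but none of them quite worked, so it occurred to me to ask ChatGPT.

The exchange above was about a slightly different command, but you can see that it gave quite a good answer.

So, taking ChatGPT's answer above into account, I set each parameter as follows.

However, it did not go smoothly, and XSOAR kept returning error responses like the one below.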

{"id":"bad_request","status":400,"title":"Bad request","detail":"Request body is not well-formed. It must be JSON.","error":"unexpected EOF","encrypted":false,"multires":null}

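If XSOAR returns an error like this, check the following three points.

<Points for accessing the Web API correctly>

1. Are the credentials set correctly in the Authorization: header?

2. Is the data in "Request body" written in valid JSON format?

3. In the "Advanced" settings, is "Encode request body" turned OFF, as in the figure below?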

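Point 2, the JSON format, is especially strict, so check it carefully. For example, as shown below, a value that seems to be enclosed in straight double quotes (") may actually end with a curly quote (”), so watch out for that.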

"59daa471-0cc5-495a-8567-1a252fd020c6”

After fixing these, I was finally able to access XSOAR successfully.
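One way to catch problems like the curly quote in point 2 before pasting a body into PAD is to parse it locally. The sketch below uses only Python's `json` module; the helper names are hypothetical, and it reports where parsing fails and where any curly double quotes sit, since JSON only accepts the straight quote (U+0022) as a string delimiter.

```python
import json
from typing import List, Optional

CURLY_QUOTES = "\u201c\u201d"  # the typographic quotes “ and ”


def json_body_error(text: str) -> Optional[str]:
    """Return None if text parses as JSON, otherwise where and why parsing failed."""
    try:
        json.loads(text)
    except json.JSONDecodeError as exc:
        return f"line {exc.lineno}, column {exc.colno}: {exc.msg}"
    return None


def curly_quote_positions(text: str) -> List[int]:
    """Return 0-based indexes of curly double quotes in text."""
    return [i for i, ch in enumerate(text) if ch in CURLY_QUOTES]


good = '{"playbookId": "59daa471-0cc5-495a-8567-1a252fd020c6"}'
bad = '{"playbookId": "59daa471-0cc5-495a-8567-1a252fd020c6\u201d}'

if __name__ == "__main__":
    print(json_body_error(good))  # None
    print(json_body_error(bad), curly_quote_positions(bad))
```

In `bad`, the closing ” is treated as part of the string, so the string never terminates and `json.loads` raises an error, much like XSOAR's "Request body is not well-formed" response.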

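Recently I heard on YouTube and blogs that CAMBLY's English lessons with AI are amazing.

What's more, the AI lessons are free once you create a CAMBLY account, so I created one to try it out. Here is a record of that.

In short, I found that you don't need to enter credit card information when registering, so it is easy to get started.

The steps I followed to create the account are below.

First, go to the following site.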

CAMBLY https://www.cambly.com/

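The CAMBLY top page appears; press the "Sign up" button in the upper right.

A screen for choosing how to register appears; register with whichever method you prefer.

Since I only wanted to create a trial account, I chose the mail icon at the lower right.

Next, enter your email address and password.

Finally, register your name (nickname).

Over the next two pages, you enter some personal information.

First, it asks about your English level.

Next, it asks about your learning goals and so on.

Finally, a promotional screen appears.

If you're not interested, just keep skipping.

The next screen is also promotional, so skip it.

Login is now complete, so select "Cambly Mini Lessons" at the lower left of the screen.

This took me to the AI lesson screen without any trouble.

I'd like to actually try it out from here.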